Maybe it’s flying cars of “The Jetsons” fame, or AI that replaces knowledge work in your field. But the future is so expansive — forever-ever? — that it most certainly includes tech we can’t even imagine yet.

To help make sense of what’s coming next, Technical.ly asked three professors at higher education institutions in Philadelphia about their coolest research projects, and their ideas about technology of the future.

VR as an education tool

Nick Jushchyshyn, an assistant professor of digital media at Drexel University and director of Drexel’s Immersive Research Lab, is researching the use of virtual reality, immersive reality and the metaverse for filmmaking and educational experiences. He focuses specifically on graphics and interaction in this technology.

He works with “3D graphical interactive environments.” Most recently, Jushchyshyn’s work has focused on turning real-world spaces and people into virtual ones that can be explored and interacted with using VR or web navigation.

Nick Jushchyshyn. (Courtesy photo)

“You’re taking real people and making digital representations of them that are fully interactive,” he said, “and can be manipulated either with motion capture technologies or driven through machine learning systems, so that we can create a more naturally interactive environment in our virtual spaces.”

The goal is to make these experiences more accessible and affordable to more people.

For Jushchyshyn, the most practical application of this technology is for education facilities, such as labs with specialized equipment. Once a virtual lab is created, it can be copied and used multiple times in the digital world. Easily accessible virtual spaces also eliminate the barriers of travel along with the costs and environmental impacts that come with travel.

“The technology of the future should make advanced learning more accessible, and the ability to practice advanced skill sets more readily accessible to broader sets of communities, and economic accessibility,” he said.

Jushchyshyn said VR has been around for about 50 years, but it hasn’t been accessible because it was extremely expensive. Now that VR is more affordable at a consumer level, it opens the doors for more experiences and uses.


Technology like this is already being used in nursing and engineering education at top institutions (and in Pittsburgh, for robotics manufacturing certification). Jushchyshyn estimated it will be another 15 to 20 years before it becomes standard technology in all education.

“I think that even more exciting is the opportunity to be able to bring these technologies further to the secondary school level and into communities that are typically underserved, where that type of training might not be so readily accessible, and hopefully we can start leveling the playing field when it comes to those,” he said. Combining this technology with machine learning could also allow students to learn from experts and teachers all over the world.

We still have a long way to go to reach those practices, Jushchyshyn said, but that shift has started. The next focus should be implementation and adoption, of virtual and augmented reality but also of immersive tech more broadly.

AI to spot deepfakes

Matthew Stamm is an associate professor of electrical and computer engineering at Drexel, where he also leads the Multimedia and Information Security Lab. Stamm’s research focuses on media forensics: He develops algorithms that judge whether photos, videos and other digital content are real or fake by checking whether they’ve been manipulated.

“The way I go about doing that is by looking for these visually imperceptible traces that are left in a piece of media,” he said. “Most of my research is based in techniques from machine learning and AI. So we develop techniques that can automatically discover these traces because they’re very hard to find.”

One interesting discovery of Stamm’s? Techniques used for fake images don’t work for videos.

Matthew Stamm. (Courtesy photo)

“This is somewhat surprising, because you can think of just a video as a series of images at 30 frames per second, but because of the processing that goes on in video compression and a bunch of other factors, the techniques that we’ve developed for images all just fail on video,” he said. “We’ve developed techniques that can figure out if there’s something fake in a video and where it’s fake. And this is important because most existing research that looks at video forgeries looks only at deepfakes.”

Deepfakes, videos in which AI replaces a featured face with someone else’s, are problematic, but they are not the only harmful fake videos, or fake media in general, out there. Stamm said techniques that look for other types of video editing and falsification don’t really exist, so his team is working on it.

Nowadays, pretty much everyone has access to tools that can edit images or videos, such as Reface and Avatarify, and not all edited media is bad, like cat memes. But the widespread availability of editing tools means misinformation (incorrect info, like a social media post about a news story that gets the facts wrong) and disinformation (incorrect info meant to mislead, like government propaganda) can be an issue.

“It’s not a problem of preventing access, it’s just is this information that I have access to, is that real?” he said. “Cryptography can’t protect you in those situations, so techniques like media forensics, they’re designed to help us provide information security when we can’t trust the source of information.”


(Here’s a deeper look at the ethics of using text-to-image AI to make art, and how deepfake technology will add complexity to hiring.)

This research has been going on for almost 20 years now, according to Stamm, who has been in the field for about 15 years. A challenge right now is that these techniques aren’t widely available; his team currently works mostly with the federal government. He thinks in the next few years, companies dedicated to this work will crop up because of the variety of applications.

“We’re working to make tools that the general public and unskilled user can use,” he said. “That might be a little bit further away.”

Long term, Stamm said he’d like to see technology that can analyze larger amounts of media and contextualize it to determine whether it’s harmful. Because tech alone may never fully solve this problem, there should also be widespread education about fake media and how it can be harmful: “what to do when you find a piece of media that might be fake, teaching people how not to pollute digital evidence, teaching people how to be smart consumers of information.”

Very tiny robots for surgery

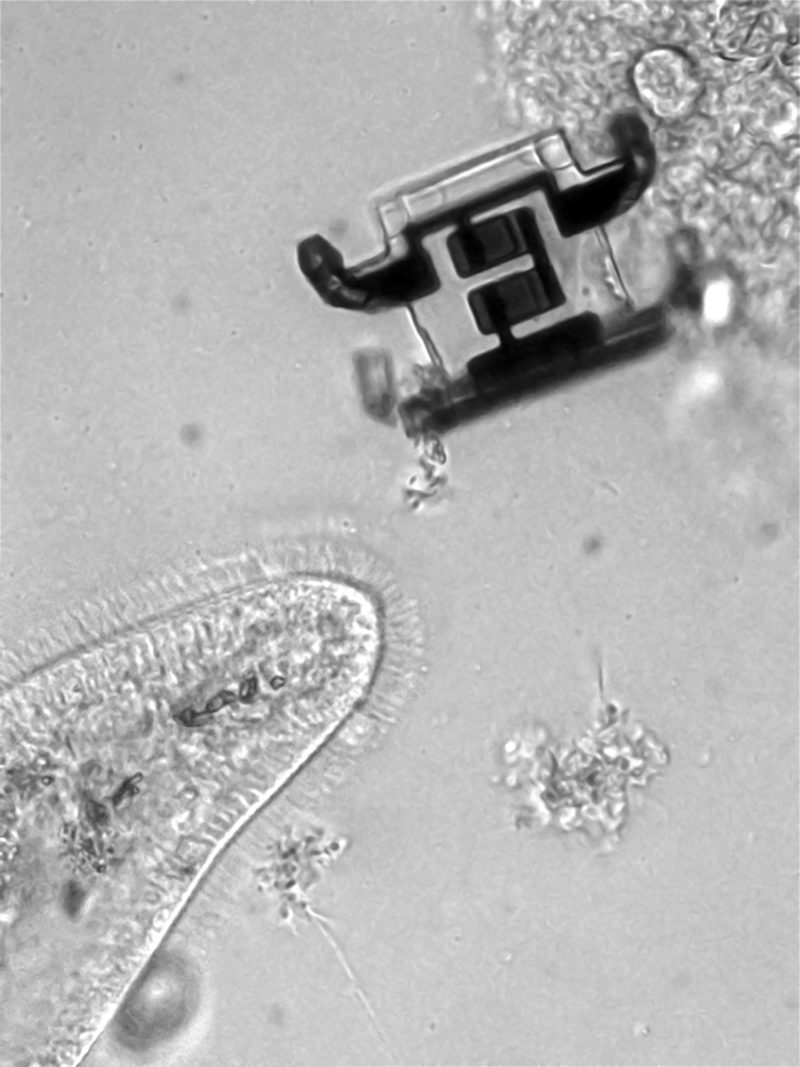

Marc Miskin is an assistant professor of electrical and systems engineering at the University of Pennsylvania whose research focuses on tiny robots. And when he says tiny, he means literally microscopic. But he also means real robots that have computers attached, move on their own and process their own energy. His students are developing nanotechnologies for these robots (they can’t use “off-the-shelf stuff,” the professor said).

Marc Miskin. (Courtesy photo)

How do you actually build tiny robots? Miskin uses tools from the semiconductor industry.

Miskin has been working on this research since about 2014. One project he is currently working on is a robot with a programmable computer on it. His team builds robots in parallel — they’re all built at the same time, the same way. If the robots are programmable, they can each do different things.

“I’m really excited about that one, because once you build that robot, then other people can get into this space without having to learn how to build them,” he said. “You can program them all to be different. You can have thousands of different behaviors, or you can give them away and give them to other people that want to use them for their application.”

What Miskin isn’t completely sure about yet is the practical application of these tiny robots.


“A lot of it depends on how good the technology really becomes,” he said. “I think there are things that we can do now that are appealing. We have some projects where we’re trying to use them in the body to do surgical tasks.”

Specifically, this research is looking at using robots in the peripheral nervous system. This system cares about tiny structures — individual nerves — that require mechanical force to repair; hence, tiny robots. But Miskin said he doesn’t know how long it will be until we see these bots in regular medical care.

“You have to justify, why do you want it to be so small? And why does it have to be a robot instead of a drug or anything else?” he said. “I think we just don’t really know where to look quite yet because we’re just coming around to the idea of thinking about this as something we can actually do.”

Miskin said he tells his students to think of this research as creating something entirely new that is future focused. Along with that comes a longer timeline before people will realize what this technology can best be used for: “You build something fundamentally new and it takes a long time to actually figure out its impact,” he said.

Sarah Huffman is a 2022-2024 corps member for Report for America, an initiative of The Groundtruth Project that pairs young journalists with local newsrooms. This position is supported by the Lenfest Institute for Journalism.

This editorial article is a part of Technology of the Future Month 2022 in Technical.ly's editorial calendar. This month’s theme is underwritten by Verizon 5G. This story was independently reported and not reviewed by Verizon 5G before publication.
