It’s been about six months since OpenAI released ChatGPT for public use — a bombshell for technologists, with its relative sophistication and adaptability. Yet for the general public, the artificial intelligence tool hasn’t entirely taken hold.

AI is often viewed as unethical, used for the likes of cheating on homework and creating autogenerated art out of the work of living artists. And as use of it grows, fear is starting to set in that the tech has the potential to be massively destructive in the future. As Technical.ly noted last month, nearly half of 800 machine learning researchers polled last year gave at least a 10% probability that AI will, in the long term, result in an extinction-level event for human civilization; only a quarter said there’s no chance of that at all.

Have humans actually created a predecessor to Skynet, the self-aware, super-intelligent AI system that destroyed most of humanity in the “Terminator” movies?

Probably not. But that doesn’t mean AI won’t be weaponized.

Chris Glanden, creator and host of BarCode, a laid-back cybersecurity podcast out of Pike Creek, Delaware, often chats about AI with his cybersecurity-expert guests from all over the world. When Stable Diffusion for images and ChatGPT for text arrived in 2022, it became clear to him that the general public either had no concept of AI’s threat potential, or they overthought its capabilities.

Glanden saw a need for something that would help a mainstream audience understand AI better. BarCode, as generally accessible as it is, is for an audience that’s into cybersecurity and technology. So he decided to expand BarCode into documentary production.

Chris Glanden. (Courtesy photo)

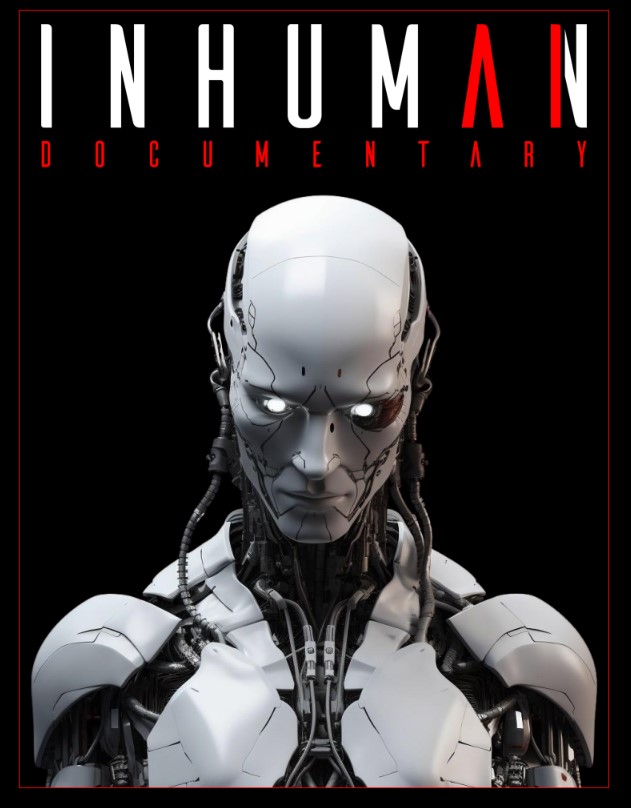

BarCode’s first documentary, called “Inhuman,” will explore AI weaponization, from the technology’s earliest days to ChatGPT.

Glanden and his documentary team plan to film in the summer, primarily in Los Angeles, Las Vegas and Boston, and release by the late fall — an ambitiously fast turnaround necessitated by the speed of AI’s evolution. By the end of the year, Glanden hopes to start screening the film, including in his hometown.

As they gear up to start production, Technical.ly asked Glanden a few questions about the project. This conversation has been condensed for length and clarity.

What is the main goal of “Inhuman”?

Glanden: I want to solve the problem of the misconceptions the public has with AI, in a cinematic format. Think of a Netflix documentary that anyone can turn on, and it hooks you. That’s what I want to do with this. We’re helping to understand AI’s evolution, while also exploring the weaponization techniques and the threats that run in parallel to the innovation we’re seeing before our eyes right now.

It’s not only people in the technology field. Everyone is affected at this point.

Who do you plan to feature?

We’re going to be talking with pioneers of AI — guys that were working for NASA in the ’70s, leading researchers, policymakers, security professionals, people that are on the front line of this. The differentiator that I’m trying to bring is authenticity. My approach is to provide that cinematic portrayal, but our core data and our message is going to stem from those leading individuals. It’s important to put that security lens on it because my whole goal is to make people aware of the threats and be able to either see what’s coming or protect themselves.

What types of AI will you explore?

You’ve seen deepfakes, I’m sure. Deepfake is a type of synthetic media. We’re going to talk about how deepfakes are made, how they could be used to spread misinformation and undermine trust. We’re going to get into voice synthesis, like a deepfake, but you’re using voice. Generative AI, where you’re creating artwork or other things, also falls under synthetic media. Then we’ll get into AI assistants and virtual companions like ChatGPT, robot companions, things like that.

What kind of robotics will you explore?

We’ll get into cyber-physical systems. We’re going to talk about computer vision, which is a field of study that focuses on enabling computers to interpret and understand input that humans give. We’re going to talk about autonomous vehicles, and Boston Dynamics, which has a dog robot. And there’s a company called Hanson Robotics that we’re going to feature that has a humanoid robot called Sophia.

What are some dangers beyond deepfakes that people should be aware of?

The dangers of AI misuse in data collection, surveillance, facial recognition, biometric tracking, bias and discrimination. We’re going to get into all of that and then we’re going to end [the documentary] with the future of AI, what we’ve concluded from all of this data.

“Inhuman” is about AI weaponization. How scared should we be?

I’m not here to do fear mongering. But at the same time, we want to portray the realism that AI is bringing, so we want to talk to futurists, we want to talk to people that own AI companies and what their trajectory looks like. And then recap the threats involved and how individuals that are watching can help secure themselves.

Who’s funding “Inhuman”?

I’m bootstrapping everything, which is tough. If people want to find out more about what we’re doing, inhumandocumentary.com is the website with crowdfunding information and sponsorship opportunities.
