Right now, it’s something you can’t always see, hear or even really know is happening. But you can find it in credit card checks, housing applications and your Netflix recommendations — and that’s only the beginning. An AI transcription service was even used in the making of this article, which you might have found via a Google News or social media algorithm.

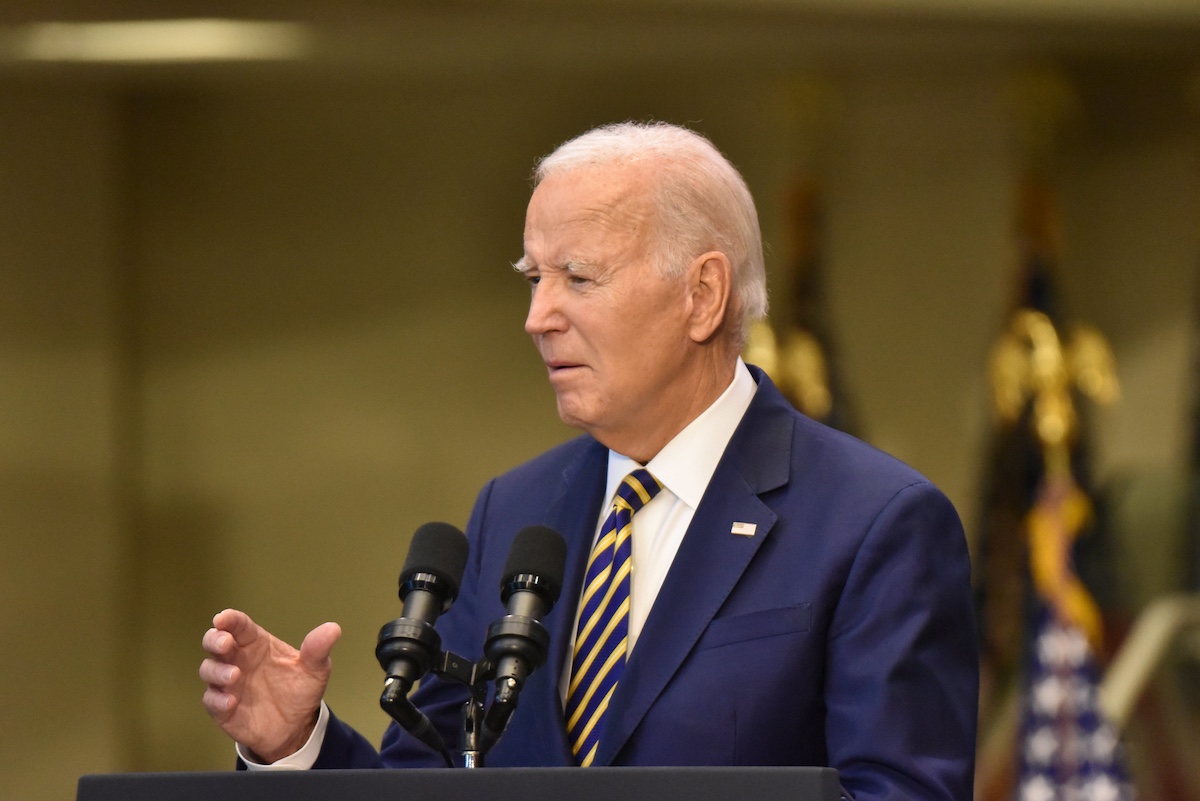

Yet it’s an area largely lacking in regulation. The US made a strong move yesterday when President Joe Biden released an executive order outlining some best practices and suggestions for the industry, in the hopes of protecting citizens and promoting American exceptionalism in the field. For many experts, it’s a huge step in the right direction.

The executive order outlines a number of recommendations and directives to better regulate the world of AI. These include:

- AI safety and security standards

- Privacy protections

- Promoting equity and civil rights through AI

- Protections for consumers, patients and students

- Boosting AI innovation

- Advancing American AI work globally

- Responsible government use of AI

Patrick Hall is an assistant professor at George Washington University who also supports work on the AI risk management framework with the National Institute of Standards and Technology (NIST) and works as an AI advisor. For him, this executive order is a key move for the AI industry.

“It’s a big step forward in a long marathon or maybe several big steps forward in a long marathon. It certainly moves the needle,” Hall told Technical.ly. “I’ve been working around AI regulation for the last five years, and this is probably one of the most significant developments, at least in the US, in those five years.”

Civil rights and AI

According to Hall, the use of algorithms in critical decision-making in employment and consumer finance is something that’s been regulated for decades. And he thinks the government does an OK job there already; some of the frameworks just needed a little cheerleading.

But the current problem? Other sectors aren’t regulated nearly as much.

“If an algorithm is used to make a credit decision about you, that’s a highly regulated decision with a great amount of regulatory oversight. If an algorithm is used to score you for criminal risk in a criminal proceeding, that’s not a highly regulated interaction, and that doesn’t make any sense,” Hall said. “I was glad to see, you know, specific callouts for more oversight, more transparency, for use of AI in the criminal justice system.”

Miriam Vogel, president and CEO of EqualAI, a DC-based nonprofit with a mission to reduce bias in AI, said this order is an important tool to prevent bias in AI and promote responsible governance.

“Actions mandated in EO will be instrumental steps in maximizing benefits and reducing harms from AI in key areas impacting Americans’ daily lives and wellbeing, including housing, health, employment, and education through a whole of government approach to its safe use and equitable application,” Vogel said. “This result will be enabled by the clear direction to each agency in the EO and the coordination by a White House AI Council to coordinate these efforts.”

For National Fair Housing Alliance President and CEO Lisa Rice, the ideal future is a society where technology can help overcome illness, improve education, access housing and credit opportunities, mitigate climate change and, generally, improve life. But right now, many are still denied access to housing, employment, voting, healthcare, education and more because of biased algorithms, she said.

“Without proper protocols, guardrails, and investments, we run the risk of unleashing untested and harmful innovations into our society that can hurt millions of people,” Rice said in a statement. “There is no doubt that technology, including AI and automated systems, is the new civil and human rights frontier.”

Safety first

Another highlight of the executive order is the idea of new standards for safety and security in AI. The order requires developers of “the most powerful AI systems” to notify the government when they’re training the AI model and to share their red-team safety testing results with the federal government. It also calls on NIST to set standards for red-team testing for public safety.

That’s a key point for Hall, who said all of the systems we interact with every day perpetuate social biases and violate privacy. At the same time, he said, consumers often don’t really know that they’re facing these technologies.

“[Many AI systems] are just wrong and broken and are just sloppy products that wouldn’t be accepted in a more mature vertical,” Hall said. “I can’t release canned food that’s dangerous, but it is possible today for any number of companies to release AI algorithms that just aren’t well tested, don’t really do what they say and can potentially be harmful.”

With this executive order, he thinks AI is getting closer to being treated like industries such as cars, airplanes, medicines and food that are thoroughly tested before public consumption.

However, not everyone wants to share training data with the government.

Linda Moore, president and CEO of industry group TechNet, said it’s important to preserve core copyright laws that she feels offer technology-neutral safeguards to boost the competitiveness of American AI tech.

“The creation of training datasets is a critical element of AI development. However, broad disclosure of training data would undermine the ability of American companies to compete with our foreign adversaries,” Moore wrote in a statement from TechNet. “Forcing AI companies to disclose this information would lessen the incentive to invest in new ways to compile, select, curate and filter training data and would require disclosure of these valuable trade secrets to foreign AI competitors, who are not subject to the same requirements.”

Tapping tech talent

The executive order also prioritizes new AI talent. It directs government agencies to rapidly hire AI professionals and to provide AI training for employees at all levels. It also calls for addressing job displacement, labor standards, workplace equity, health, safety and data collection to benefit AI workers. Plus, it calls for modernizing visa processing for immigrants and nonimmigrants with industry expertise.

Laura Stash, executive VP of solutions architecture at Arlington, Virginia, tech consulting firm iTech AG, said the order provides a positive outlook for AI procurement, product acquisition and new talent.

“The workforce implications are particularly important — IT teams must understand AI systems before being able to make risk-based decisions, which is why AI talent acquisition is highlighted,” Stash wrote to Technical.ly in an email. “Right now, the current goal for enterprises and government must be to hire, change processes and provide training so organizations are comfortable with and aware of AI, and how it can be used successfully while maintaining security – this includes all forms of AI, like predictive AI, generative AI and human in the loop AI.”

The path forward

For Barry Lynn, executive director of the Open Markets Institute, the executive order is hugely important for identifying the true potential harms of AI, and just how far they reach into many different industries.

“It also demonstrates that our government already has vast existing authority to address many of the most pressing threats,” Lynn said. “Yes, new law will help. But the EO shows how well previous generations of lawmakers equipped the U.S. government to ensure that new technologies work for all Americans, not just the few.”

Hall agreed, noting that while this is just the first step, any rational regulation of AI is likely a good thing. As policymakers take the first steps in following the order, he hopes that it can change the view of AI technology — so everyone can see just how prominent the technology really is.

“We need to stop thinking of AI systems as things that are written about in research papers and things that are in movies,” Hall said. “AI and machine learning systems are becoming big parts of our lives, for better or worse, and one of the minimum things that we could do would be to treat them like other consumer products.”