How can the mainstream adoption of large language models (LLMs) and other generative AI tools help workers be more productive and efficient?

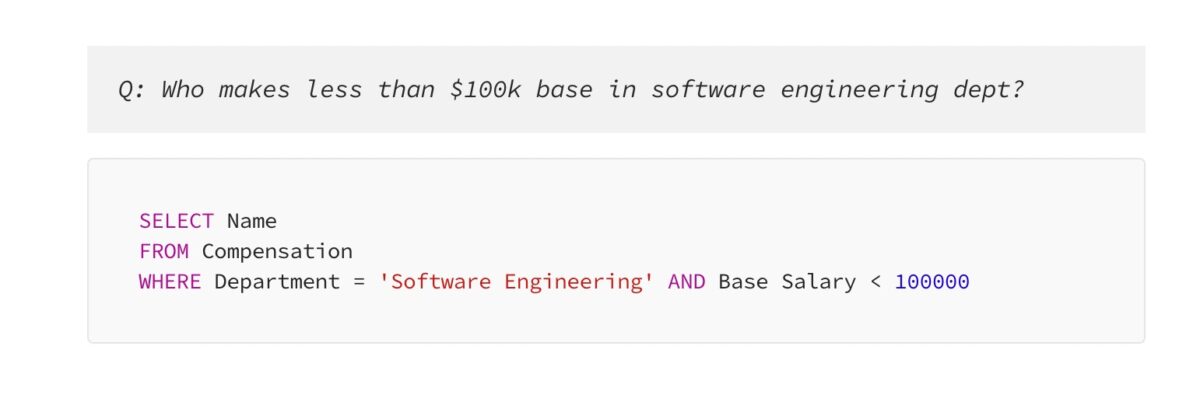

Data analysis is one area where natural language prompts let non-technical professionals work with data directly, without help from developers or data practitioners. This makes data analysis accessible to workers who do not know SQL or other programming languages.

Layering LLMs on top of public datasets is a powerful way to help workers get answers to questions at scale. For example, HR databases typically contain salary information, and salary data is also often published by governments as open data. However, finding this data can be time-consuming and challenging, requiring searches through PDFs, spreadsheets, or data portals. With natural language prompts powered by the latest LLMs, workers can get answers to their queries quickly and easily, without technical expertise.

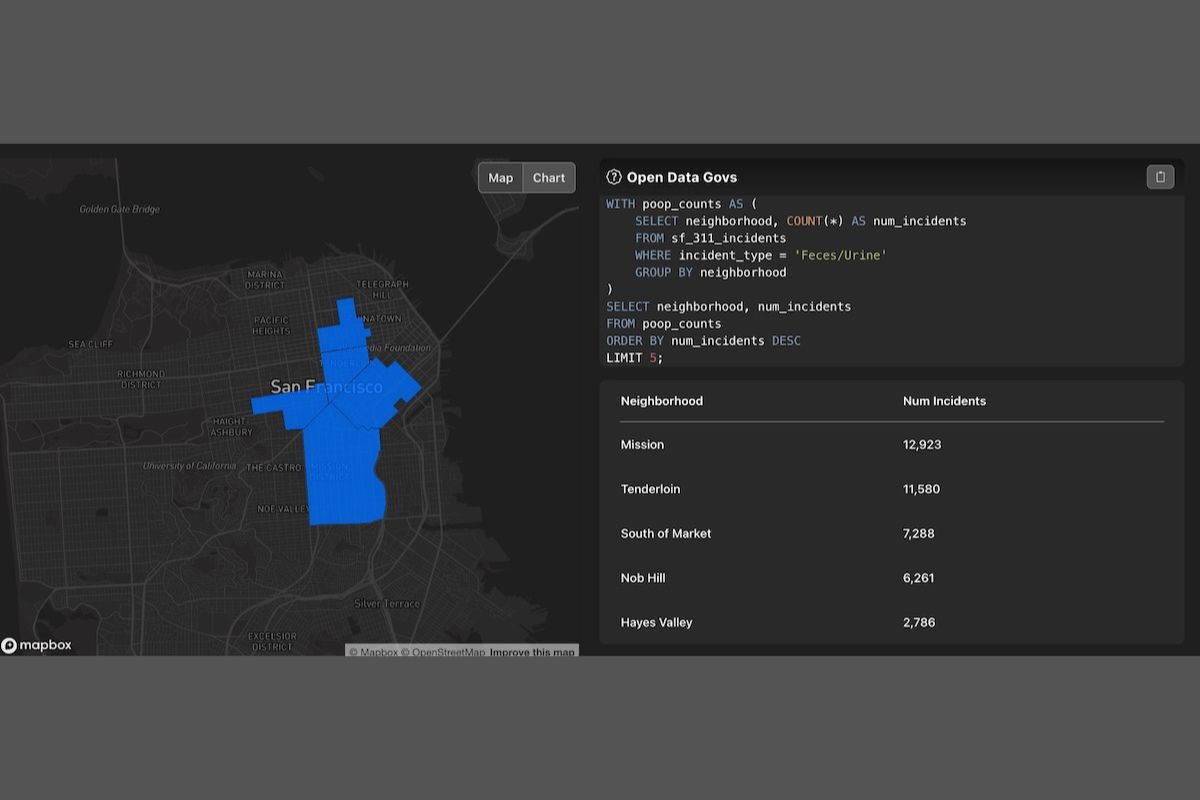

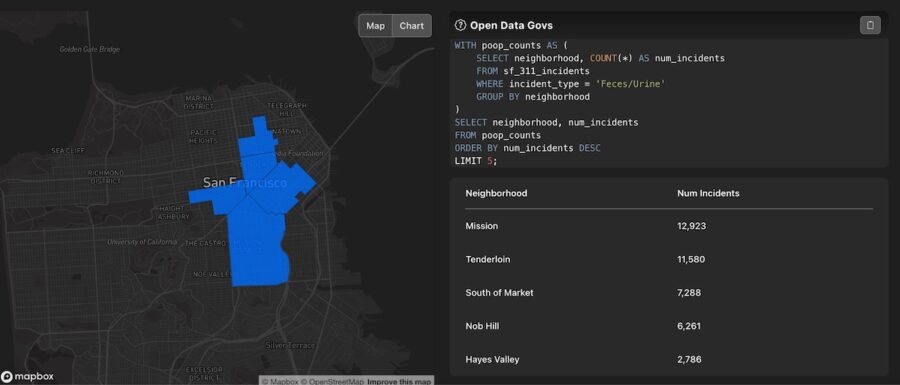

For instance, a natural language prompt can be used to extract information from government datasets, such as the City of San Francisco’s open data portal. Given a question like “Where are all the poop complaints located in SF?”, the LLM interface can return a table, a SQL query, and a map showing the locations of these complaints. Workers can then base decisions and recommendations on the data, such as identifying areas in SF where more public restrooms should be set up.

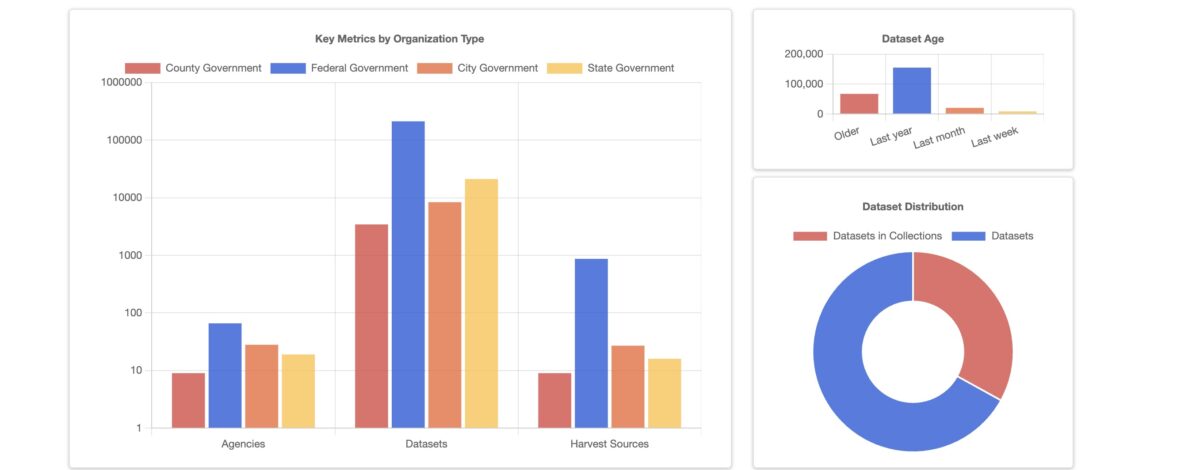
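The question-to-answer flow described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the tool shown in the screenshots: the `complaints` table, its columns, the sample rows, and the category label are all hypothetical, and `generate_sql` is a stand-in that returns a fixed query where a real application would call an LLM.

```python
import sqlite3

# Hypothetical schema; a real deployment would load the city's published
# complaint data rather than the sample rows below.
SCHEMA = """
CREATE TABLE complaints (
    id INTEGER PRIMARY KEY,
    category TEXT,
    neighborhood TEXT,
    latitude REAL,
    longitude REAL
)
"""

# Made-up rows for illustration only.
SAMPLE_ROWS = [
    (1, "Human or Animal Waste", "Tenderloin", 37.7840, -122.4140),
    (2, "Graffiti", "Mission", 37.7599, -122.4148),
    (3, "Human or Animal Waste", "Mission", 37.7610, -122.4190),
    (4, "Human or Animal Waste", "Tenderloin", 37.7835, -122.4125),
]


def build_prompt(question: str) -> str:
    """Combine the table schema and the user's question into one prompt."""
    return (
        "Given this SQLite table:\n"
        f"{SCHEMA.strip()}\n"
        "Write one SELECT statement that answers the question.\n"
        f"Question: {question}"
    )


def generate_sql(prompt: str) -> str:
    """Stand-in for the LLM call (assumption): returns a fixed query."""
    return (
        "SELECT neighborhood, latitude, longitude FROM complaints "
        "WHERE category = 'Human or Animal Waste' ORDER BY id"
    )


def answer(question: str, conn: sqlite3.Connection):
    """Return the generated SQL and the rows it produces."""
    sql = generate_sql(build_prompt(question))
    # Guard: only run read-only statements produced by the model.
    if not sql.lstrip().upper().startswith("SELECT"):
        raise ValueError("refusing to run a non-SELECT statement")
    rows = conn.execute(sql).fetchall()
    return sql, rows


def make_demo_connection() -> sqlite3.Connection:
    """Create an in-memory database holding the sample rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute(SCHEMA)
    conn.executemany("INSERT INTO complaints VALUES (?, ?, ?, ?, ?)", SAMPLE_ROWS)
    return conn


if __name__ == "__main__":
    conn = make_demo_connection()
    sql, rows = answer("Where are all the poop complaints located in SF?", conn)
    print(sql)
    for row in rows:
        print(row)
```

The returned rows supply the table, the SQL string can be shown to the user for transparency, and the latitude and longitude columns are what a mapping layer would plot. Checking that the generated statement is a `SELECT` before running it is one simple precaution, since model output should not be trusted to modify data.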

The adoption of generative AI and LLMs in the workforce will undoubtedly create many tools that improve workflows and save workers time. However, this approach also poses challenges, such as ensuring that the underlying data is reliable, accurate, and high-quality. A common data model and an adequate amount of training data are also essential for effective data analysis. Nonetheless, the more than 250,000 datasets available on the U.S. government’s data portal provide an excellent starting point for deploying internal LLM apps or for testing models on public data before using private data.