On an early Friday afternoon, a dozen music technology students at Drexel University’s MET-Lab wrap up lunch before plunging back into work, scattered around computer stations in a cramped fourth-floor laboratory.

Youngmoo Kim, a youthful and clean-cut professor, rides the elevator up in the Bossone Building, at 31st and Market, to meet his students before launching into a presentation about the lab’s objectives: an exciting look at the future of music and entertainment technology, hence the name MET. The five-year-old lab is trying to create new technologies around music production, recommendation, organization and interfacing, the four cores of its mission. Its most obvious goal, though, is to teach computers to use sound.

Kim wears a sweater vest and glasses, and at 37 years old, his demeanor complements his experience. After growing up in the Midwest playing piano and nurturing a love of music and technology, he has spent a good portion of his adulthood in the Northeast dabbling in music. He earned his Ph.D. in Media Arts and Sciences from MIT and master’s degrees in Electrical Engineering and Music from Stanford. He also did a stint at Swarthmore, earning a B.A. in Music before heading north.

Kim lets the students show off their projects and reminds them of small details that could be causing bugs, sometimes showing a subtle frustration that comes naturally with the job. But his dedication is real, evident in the projects coming out of MET-Lab under Kim’s direction.

“Hopefully we can change the world of music entertainment 10 to 20 years from now,” he says, rushing across campus to show off another student’s project.

Below are just a handful of the many projects MET-Lab is working on.

Electronically augmented piano

Post-doctoral researcher Andrew McPherson’s impressive augmented acoustic piano, housed in an upstairs recital hall in a University music building, would look normal if not for the electromagnets and ribbon cables pouring out of the piano’s body and hooked up to a black MacBook.

The instrument produces an ethereal sound, one that seems to be coming from a synthesizer through speakers. But it’s not: the sound is created by the electromagnets vibrating the piano’s strings.

Hear a recording of the electronically augmented piano below.

[audio:http://technical.ly/philly/wp-content/uploads/sites/2/2009/11/electo_piano.mp3]

Automatic Score Following

Using an iPhone and iPod touch application, audiences at SpectiCast-broadcast Philadelphia Orchestra concerts, which we’ve covered in the past, can follow along with the score, track measures and view details about the pieces.

The system compares a pre-recorded performance with the live audio to keep track of where the orchestra is in the piece. It then streams that information from a central server to audience members’ mobile phones in real time. The hope is that the app can improve education for a dying art form.
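The story doesn’t say exactly how the score follower matches the two recordings. One common technique for this kind of audio-to-audio alignment is dynamic time warping (DTW), which finds the cheapest way to stretch one feature sequence onto another. The Python sketch that follows assumes each moment of audio has already been reduced to a small feature vector. The names `dtw_path`, `current_measure` and `measure_starts` are hypothetical, not the lab’s code.

```python
import math
from bisect import bisect_right
from typing import List, Sequence, Tuple

# One feature vector per audio frame (e.g. chroma or band energies); the feature choice is an assumption.
Frame = Sequence[float]


def dtw_path(reference: Sequence[Frame], live: Sequence[Frame]) -> List[Tuple[int, int]]:
    """Align two feature sequences with dynamic time warping; returns (ref, live) index pairs."""
    n, m = len(reference), len(live)
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(reference[i - 1], live[j - 1])
            # Extend the cheapest of: a match, a held reference frame, or a held live frame.
            cost[i][j] = d + min(cost[i - 1][j - 1], cost[i - 1][j], cost[i][j - 1])
    # Walk back from the end of both sequences to recover the cheapest alignment path.
    i, j = n, m
    path = [(i - 1, j - 1)]
    while i > 1 or j > 1:
        steps = []
        if i > 1 and j > 1:
            steps.append((cost[i - 1][j - 1], i - 1, j - 1))
        if i > 1:
            steps.append((cost[i - 1][j], i - 1, j))
        if j > 1:
            steps.append((cost[i][j - 1], i, j - 1))
        _, i, j = min(steps)
        path.append((i - 1, j - 1))
    path.reverse()
    return path


def current_measure(path: List[Tuple[int, int]], live_frame: int, measure_starts: List[int]) -> int:
    """Return the 1-based measure reached at live_frame.

    measure_starts is a hypothetical sorted list of reference-frame indices where each measure begins.
    """
    ref_frame = max(ref for ref, liv in path if liv == live_frame)
    return bisect_right(measure_starts, ref_frame)
```

This offline version needs the whole live recording before it can align anything. A system that updates phones during a concert would more plausibly use an online DTW variant that extends the path frame by frame as audio arrives.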

Dancing Robot


To help understand how the human mind processes dance, including the patterns and movements involved, MET-Lab is working on an electronic dancing robot that interfaces with an application that analyzes music to create dynamic dances. Though the lab demonstrated only a random sampling of the robot’s moves, the figure waved its arms and moved its feet in real time to a song.

Kim says the lab could someday plug its findings into Drexel’s Hubo, a lifelike humanoid prototype in the University’s robotics lab that Kim estimates cost $500,000.

Multi-touch Display

Multi-touch surfaces, made famous by Microsoft Surface and, more recently, by mobile handsets like Apple’s iPhone, have been researched for years. But MET-Lab prototypes its own device in-house, using an infrared camera to track finger touches on a glass display so that many inputs can manipulate the interface at once.
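The story doesn’t describe the lab’s image processing. A common approach for camera-based multi-touch tables is to threshold each infrared frame and treat every connected bright region as one fingertip. The Python sketch that follows assumes that approach, with frames given as 2D lists of 0-to-255 brightness values. `find_touches`, `threshold` and `min_pixels` are illustrative names and values.

```python
from collections import deque
from typing import List, Tuple

Image = List[List[int]]  # rows of 0-255 infrared brightness values (assumed format)


def find_touches(frame: Image, threshold: int = 200, min_pixels: int = 2) -> List[Tuple[float, float]]:
    """Return (x, y) centroids of bright blobs, treating each blob as one fingertip."""
    rows = len(frame)
    cols = len(frame[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    touches: List[Tuple[float, float]] = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or frame[r][c] < threshold:
                continue
            # Flood-fill the 4-connected bright region that starts at (r, c).
            seen[r][c] = True
            queue = deque([(r, c)])
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < rows and 0 <= nx < cols and not seen[ny][nx] and frame[ny][nx] >= threshold:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            # Ignore specks of glare smaller than a plausible fingertip.
            if len(pixels) >= min_pixels:
                cx = sum(x for _, x in pixels) / len(pixels)
                cy = sum(y for y, _ in pixels) / len(pixels)
                touches.append((cx, cy))
    return touches
```

Running this on every camera frame yields a list of touch points. Matching points across frames then turns them into gestures like pinch-to-zoom.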

The lab showed off several applications that take advantage of the technology. One is a virtual microscope, developed in conjunction with UPenn, that lets users, namely doctors, zoom in on microscope slides using hand gestures such as pinch-to-zoom and double-tap. Another application lets users load a variety of musical instruments, like traditional and experimentally designed pianos, and play them simultaneously.


Pulse2

Students at MET-Lab wanted to create a real-time version of games like Guitar Hero and Rock Band, one that adapts dynamically as a song changes, using spectrum analysis of its recorded beats, melodies and other qualities.

The lab paired up with Drexel’s game design department to create Pulse2, a multi-platform Adobe AIR game. The result is a fast-paced and fun game that reacts to song dynamics, changing the pace of the gameplay, the number of enemies and the shapes and colors seen on the screen.
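Pulse2 itself was built on Adobe AIR, and the story doesn’t say which spectral measures it uses. As an illustration only, the Python sketch that follows computes spectral flux, a standard measure of how sharply a song’s spectrum jumps from one moment to the next. It then feeds that value into a made-up enemy-spawning rule. `spectral_flux`, `enemies_for` and their parameters are hypothetical.

```python
import cmath
import math
from typing import List, Sequence


def magnitude_spectrum(frame: Sequence[float]) -> List[float]:
    """Naive DFT magnitudes for bins 0..N/2 (fine for a sketch; a real game would use an FFT)."""
    n = len(frame)
    return [
        abs(sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
        for k in range(n // 2 + 1)
    ]


def spectral_flux(samples: Sequence[float], frame_size: int = 64) -> List[float]:
    """Sum of positive magnitude increases between consecutive, non-overlapping frames."""
    fluxes: List[float] = []
    previous = None
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        current = magnitude_spectrum(samples[start:start + frame_size])
        if previous is not None:
            # Only rising energy counts, so sudden attacks (drum hits, new notes) stand out.
            fluxes.append(sum(max(0.0, c - p) for c, p in zip(current, previous)))
        previous = current
    return fluxes


def enemies_for(flux: float, base: int = 2, per_unit: float = 0.1, cap: int = 20) -> int:
    """Hypothetical game rule: busier moments in the song spawn more enemies, up to a cap."""
    return min(cap, base + int(flux * per_unit))
```

A sudden burst of sound after silence produces a large flux value and more enemies. A steady tone produces almost none, so the game would calm down during sustained passages.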

MoodSwings

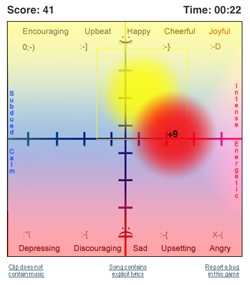

MET-Lab is using its MoodSwings collaborative music-tracking game to collect data from players and assemble a dataset on how people categorize mood in music tracks.

Anyone can log in and be automatically paired with an anonymous partner. Both players then listen to 30 seconds of a track and rate its mood, from happy to sad, calm to energetic and anywhere in between. Players score more points when their ratings agree. The data is being used to gauge how people react to shifts in music and to songs overall.
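The story doesn’t spell out MoodSwings’ scoring formula. The Python sketch that follows assumes the mood space is a square with valence (sad to happy) on one axis and arousal (calm to energetic) on the other. It awards more points the closer two players’ ratings sit. `MoodRating`, `agreement_points`, `session_score` and the 0-to-100 point scale are all hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class MoodRating:
    valence: float  # -1.0 = sad, +1.0 = happy (assumed scale)
    arousal: float  # -1.0 = calm, +1.0 = energetic (assumed scale)


# Largest possible distance: opposite corners of the [-1, 1] x [-1, 1] mood square.
MAX_DISTANCE = math.hypot(2.0, 2.0)


def agreement_points(a: MoodRating, b: MoodRating, max_points: int = 100) -> int:
    """Hypothetical rule: identical ratings earn max_points, opposite corners earn 0."""
    distance = math.hypot(a.valence - b.valence, a.arousal - b.arousal)
    return round(max_points * (1.0 - distance / MAX_DISTANCE))


def session_score(ratings_a: Sequence[MoodRating], ratings_b: Sequence[MoodRating], max_points: int = 100) -> int:
    """Sum agreement over a clip, e.g. one pair of ratings per second of a 30-second track."""
    return sum(agreement_points(a, b, max_points) for a, b in zip(ratings_a, ratings_b))
```

Scoring by distance rather than exact matches rewards near-agreement. That also yields a graded record of how closely listeners’ impressions line up as the music shifts.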
