With artificial intelligence use ramping up across government agencies, the people at its forefront are weighing risk against opportunity.

The District of Columbia navigates this dynamic while actively introducing and developing ways for residents to use AI day to day. Stephen Miller, the city’s acting chief technology officer, said that DC itself is “adequately prepared” to use AI transparently, ethically and safely.

“We’ve done everything we can for the last couple years to understand the data,” Miller said on a recent panel of experts hosted by DC Tech Meetup. “We have everything ready on the back end. We’re ready for implementing AI.”

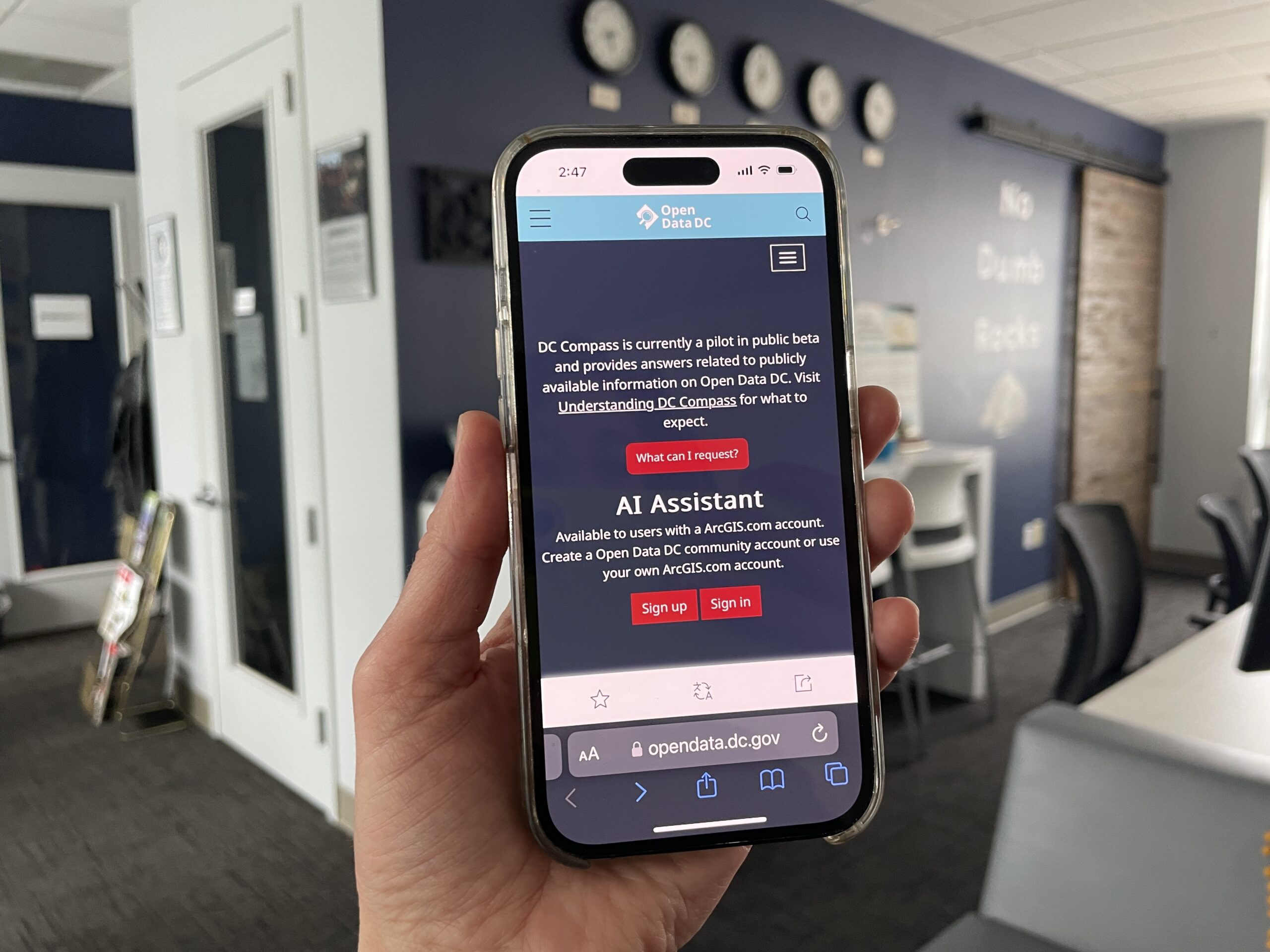

DC Compass, an AI chatbot aiming to help users better navigate the city’s open data, launched in the spring. Miller said it aligns with DC’s AI Values, a part of a broader strategic plan to make sure local government’s different AI use cases have a clear benefit and are safe and transparent.

“It allows everyone to be a data scientist, as long as you know how to ask a question and type it up,” Miller said. “It’s an amazing product.”

This panel about using AI in the public sector came as workers in the public sector across the country are requesting more transparency and regulation. The AFSCME, the largest trade union of public sector workers, voted to demand more AI-related policies and guidelines over the summer.

But other panelists did not embrace the public sector’s readiness as eagerly as Miller. As a whole, public entities are on their way to being prepared to use AI, but how far along each one is depends on factors like staff size and available talent, said Mia Hoffmann, an AI researcher at Georgetown University’s Center for Security and Emerging Technology.

“It’s a process,” Hoffmann said, “and it’s a spectrum of readiness.”

Aaron Wilkowitz, a solutions engineer in the public sector division of the tech giant OpenAI, said he’s “excited” about the public sector beginning to use AI, but noted the work still to be done.

“There’s a range of preparedness that we see across different agencies and different groups, but generally, the public sector is on its way to preparedness,” Wilkowitz said.

In an effort to better understand risk and to work with the government, OpenAI is working with the National Institute of Standards and Technology, part of the Department of Commerce, to conduct AI safety research. Wilkowitz also brought up OpenAI’s Model Spec, a document built in collaboration with public and private sector leaders that outlines ideal AI model behavior.

There are benefits, Wilkowitz assured, like using the tech for language translation. For example, he said OpenAI worked with the state of Minnesota to translate government documents into languages like Hmong and Somali for which it would be difficult to find a translator.

“It’s an opportunity for us to make government services accessible to every resident,” Wilkowitz said.

With that in mind, Miller said he’s focused on equitable access to tools and upskilling workers in DC. Using investments from the Bipartisan Infrastructure Law, Miller said he’s working on developing certification programs in AI for public sector workers.

Moreover, Miller was adamant that deploying AI will not have a “negative impact” on the workforce in DC.

When disseminating and developing AI through different agencies, leaders must adhere to established guidelines and take people’s concerns seriously, said Andrew Gamino-Cheong, the cofounder and chief technology officer of the AI governance strategy software company Trustible.

Also, they must be transparent about mistakes, he said.

“If you’re more transparent, if you’re showing me the model spec or system problems,” Gamino-Cheong said, “that’ll actually do probably more to drive this idea of responsible AI.”
